bagging machine learning ensemble

I am Shridhar Mankar, an engineer, YouTuber, educational blogger, educator, and podcaster.

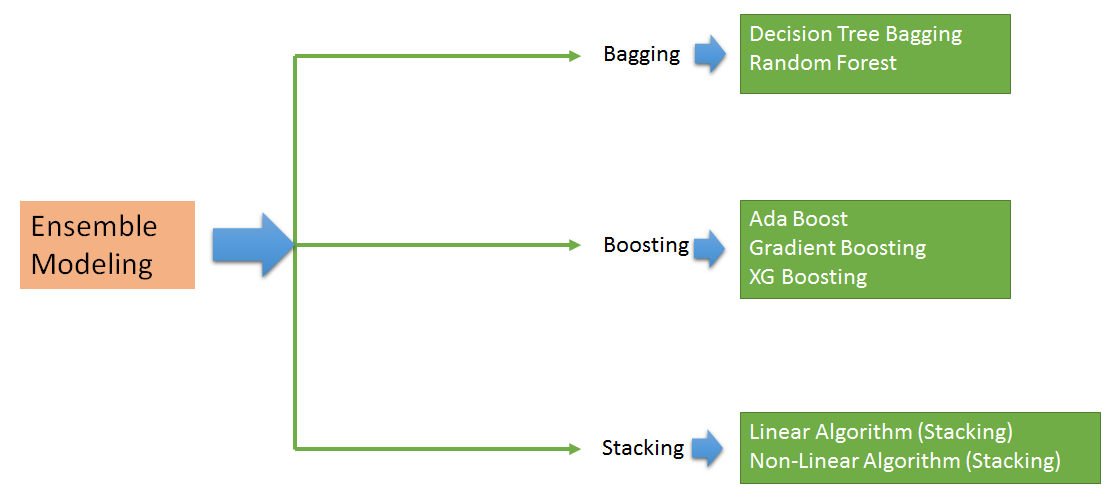

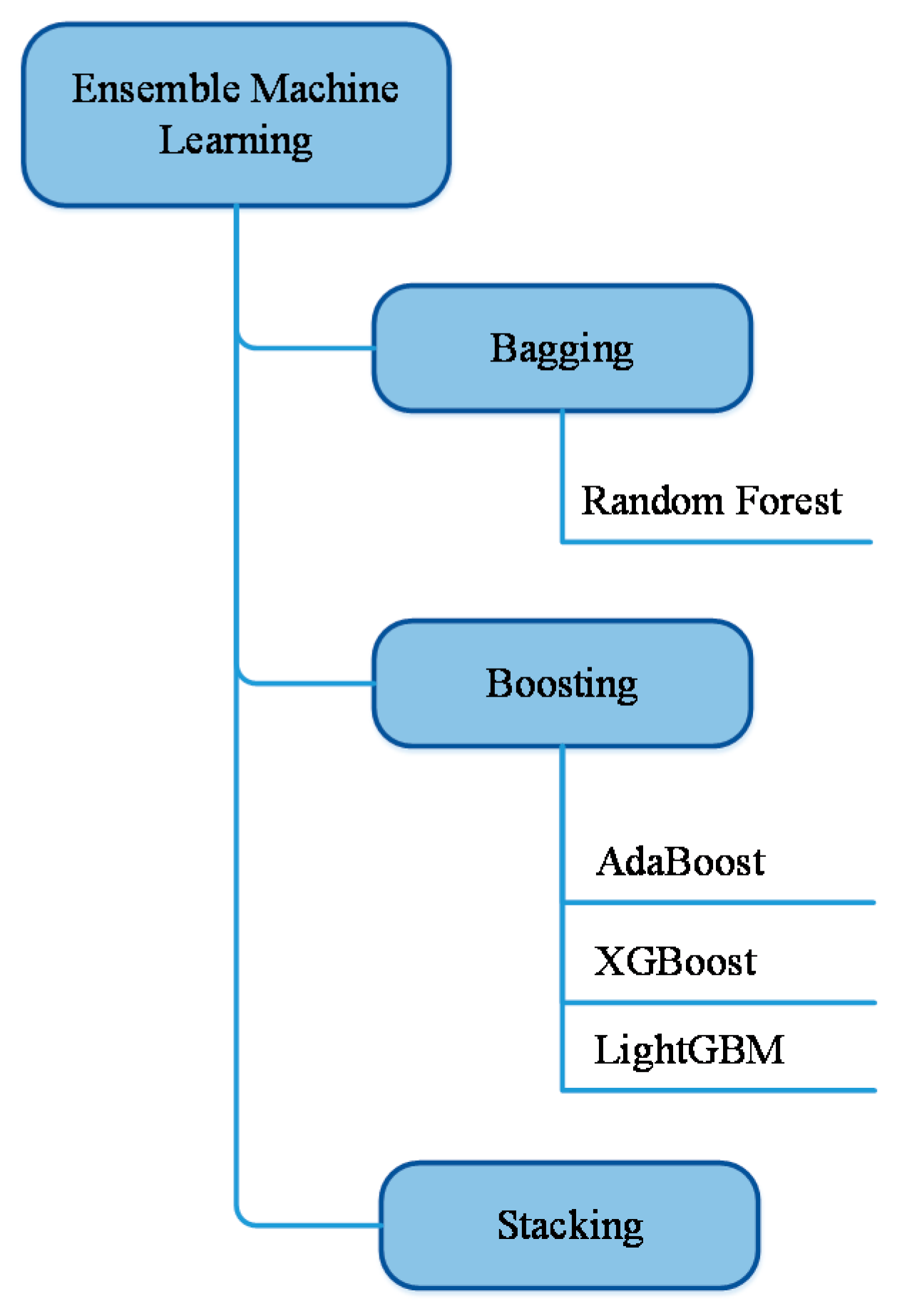

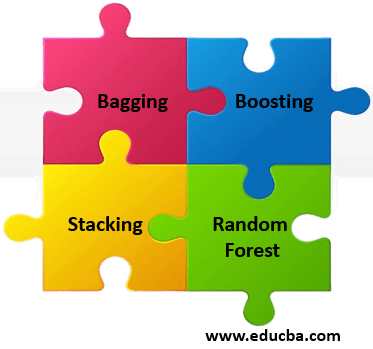

Ensemble Methods In Machine Learning Bagging Boosting And Stacking

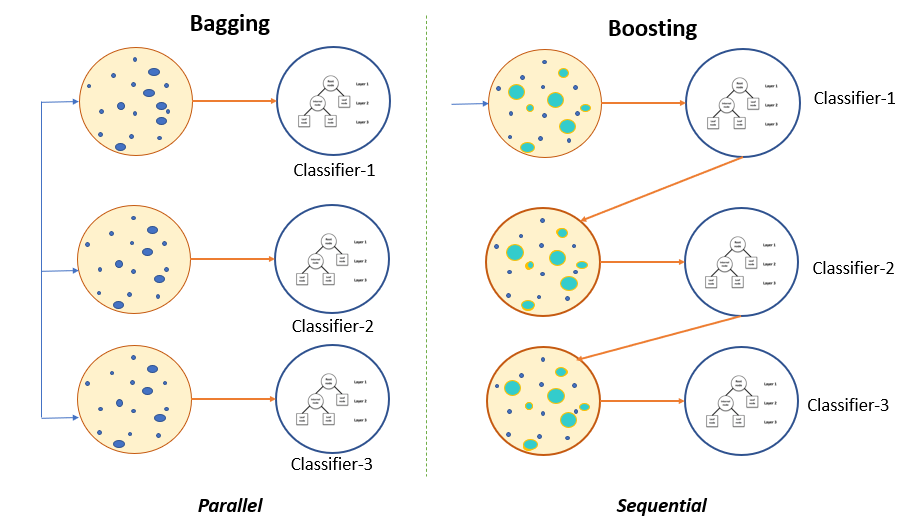

Bagging and boosting both use random sampling to generate several training sets.

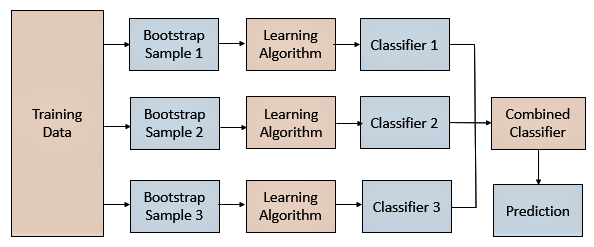

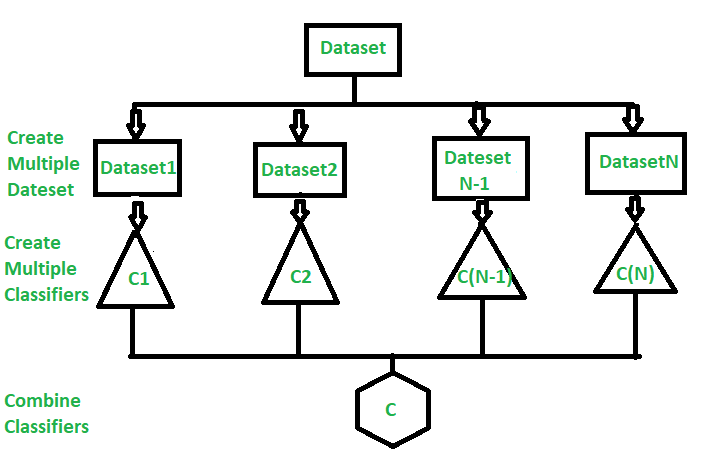

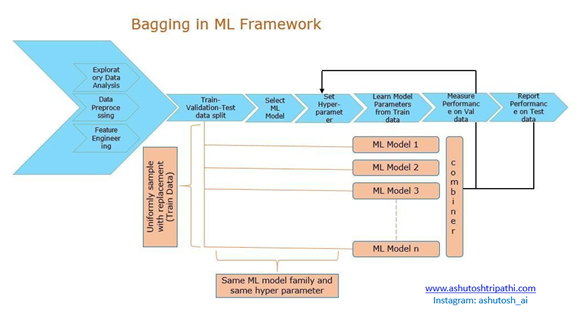

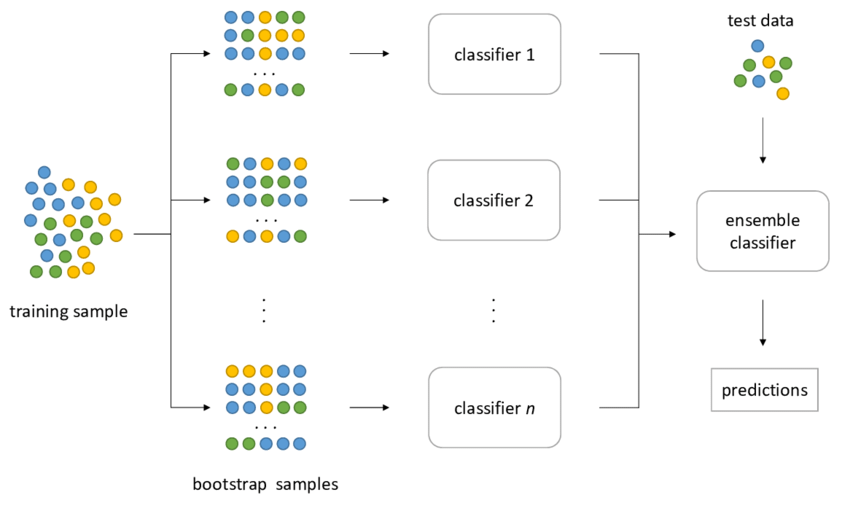

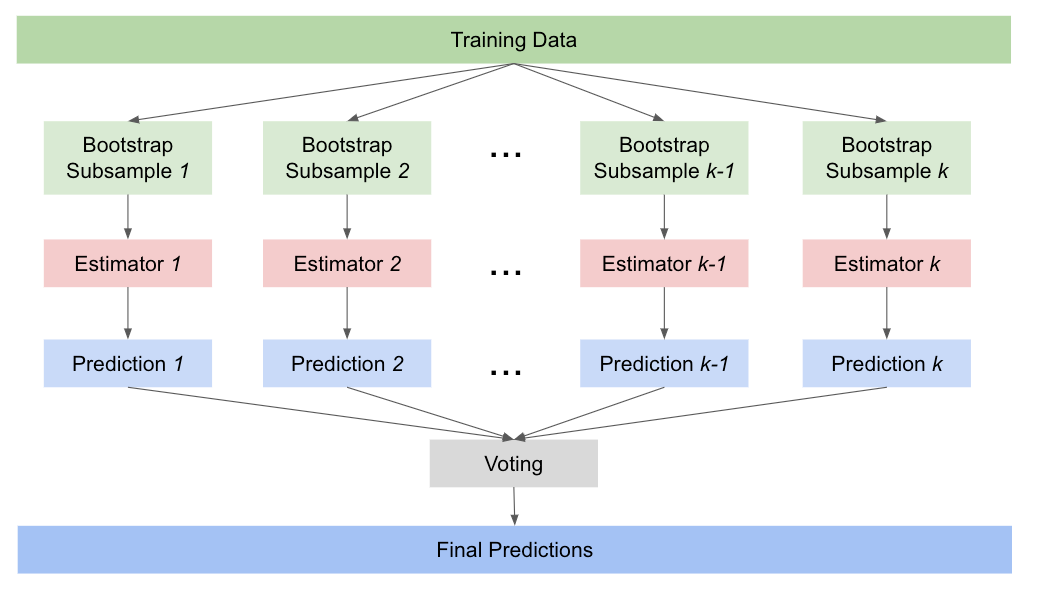

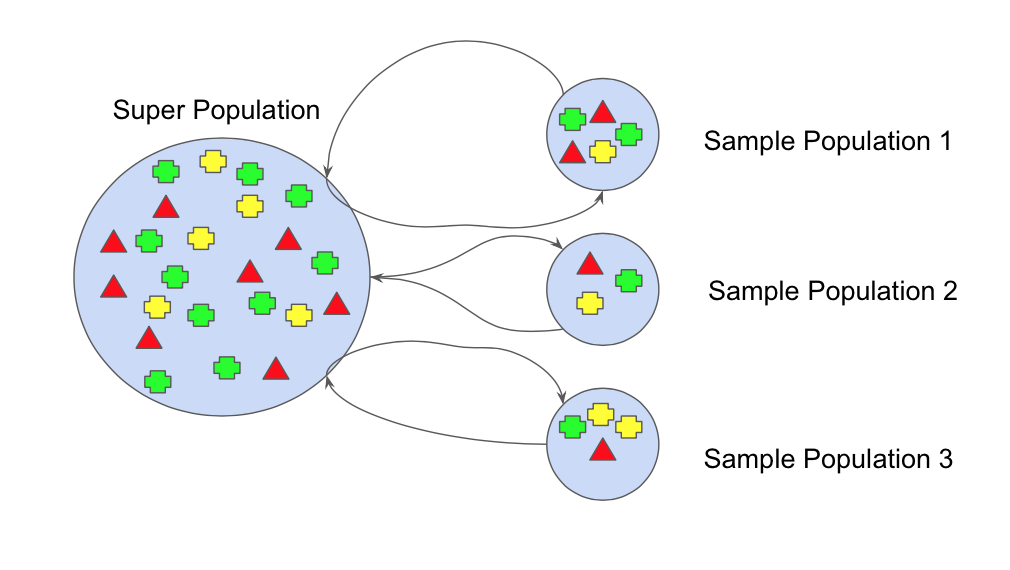

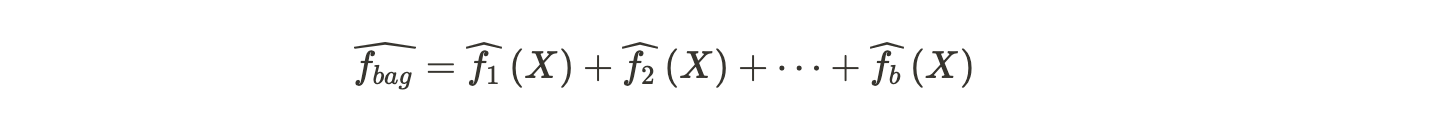

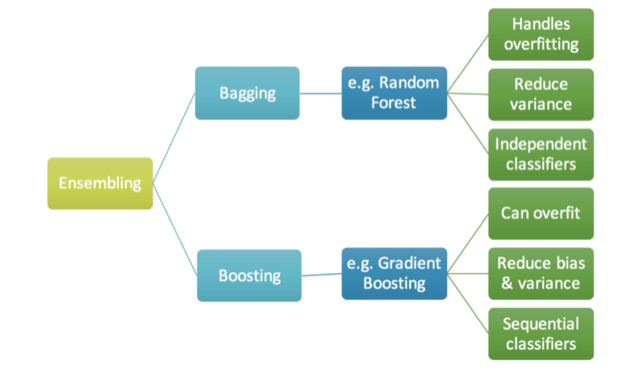

Bootstrap aggregating, also called bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning models. An ensemble consists of a set of individually trained base learners (models) whose predictions are combined when classifying new cases.

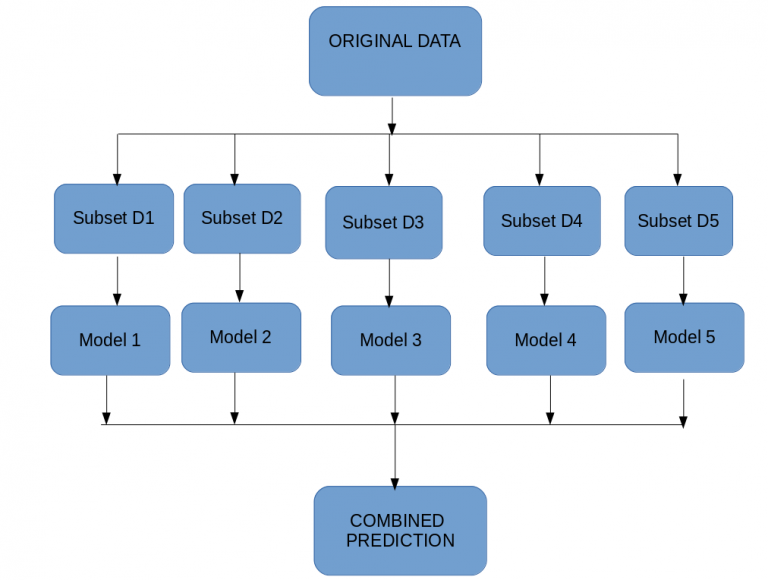

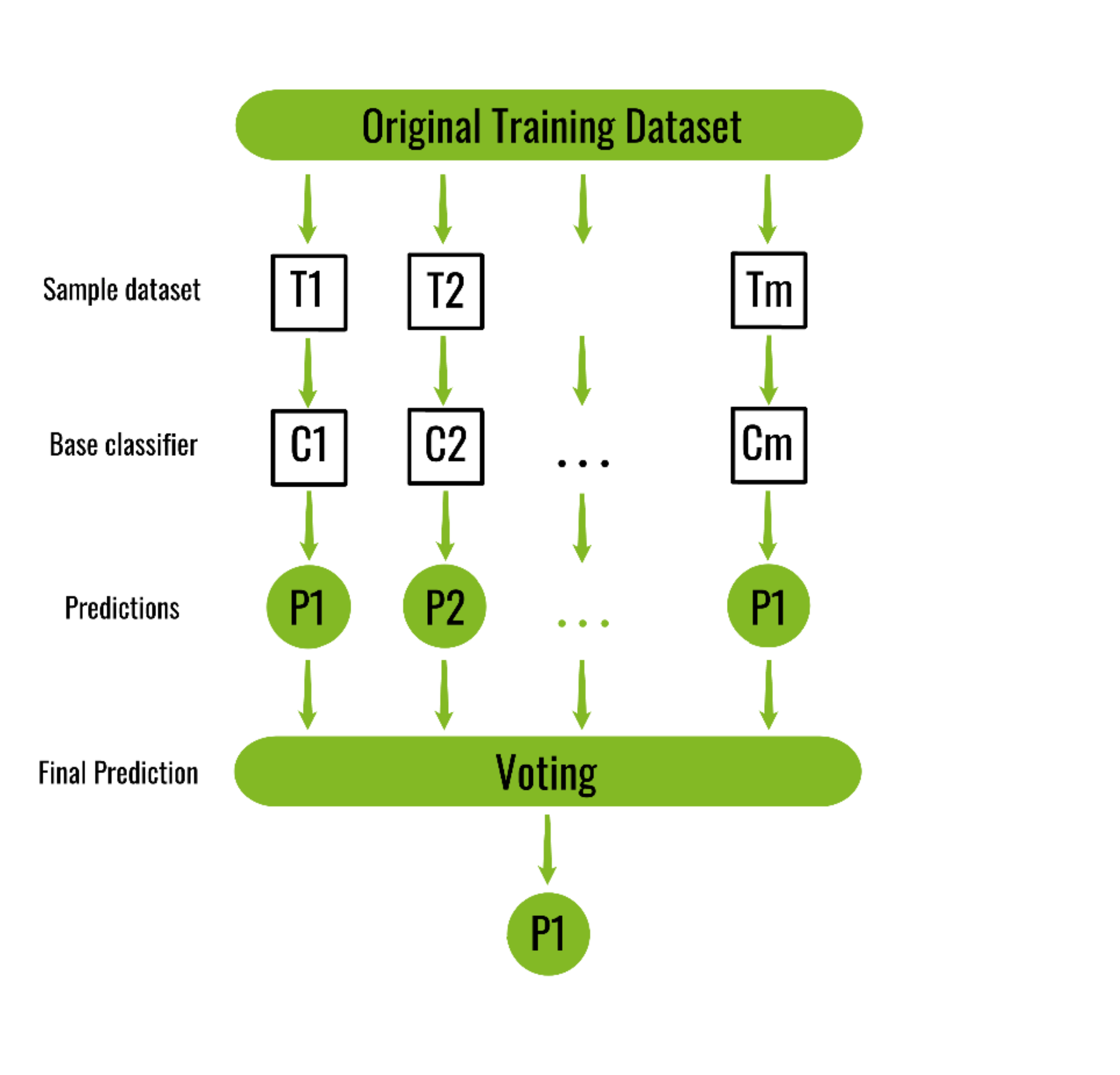

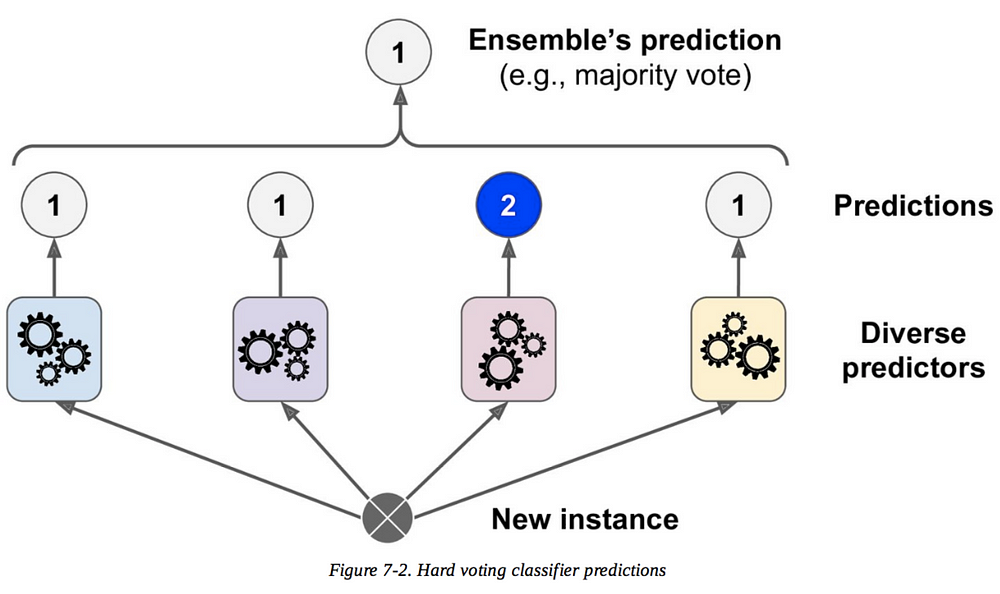

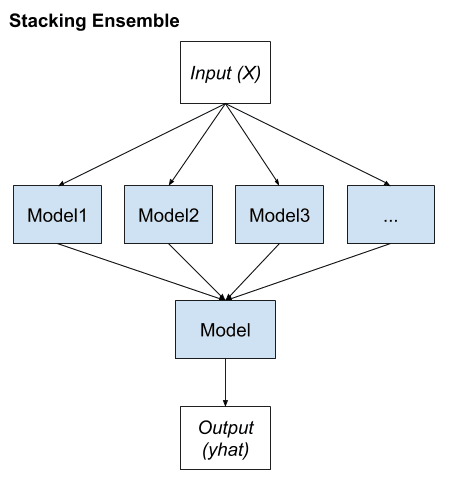

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, either by voting or by averaging, to form a final prediction. Ensemble learning is a machine learning paradigm in which multiple models are trained and their predictions combined.
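The fit-then-vote procedure described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a library implementation: the base classifier is a hypothetical one-dimensional threshold rule (a "stump"), and the names `fit_stump`, `fit_bagging`, and `predict` are invented for this example.

```python
import random
from collections import Counter

def fit_stump(points):
    """Fit a 1-D threshold classifier: predict 1 when x >= threshold."""
    best_threshold, best_errors = None, None
    for threshold in sorted({x for x, _ in points}):
        # Count training points this threshold would misclassify.
        errors = sum((x >= threshold) != bool(y) for x, y in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold

def fit_bagging(points, n_estimators, rng):
    """Fit one stump per bootstrap sample of the training points."""
    stumps = []
    for _ in range(n_estimators):
        sample = [rng.choice(points) for _ in points]  # drawn with replacement
        stumps.append(fit_stump(sample))
    return stumps

def predict(stumps, x):
    """Aggregate the stumps' predictions by majority vote."""
    votes = Counter(int(x >= threshold) for threshold in stumps)
    return votes.most_common(1)[0][0]

rng = random.Random(42)  # fixed seed so the run is repeatable
train = [(x, int(x >= 5)) for x in range(10)]  # toy data: label is 1 when x >= 5
model = fit_bagging(train, n_estimators=25, rng=rng)
print(predict(model, 0), predict(model, 9))
```

An odd number of estimators is used here so that a binary vote cannot tie.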

Ensemble learning combines the predictive power of multiple models to obtain better predictions with lower variance. Bagging, also known as bootstrap aggregating, is an ensemble method that improves the stability and accuracy of machine learning models. In one study, an ensemble learning algorithm for predicting HIV-1 PR cleavage sites, named EM-HIV, is proposed by training a set of weak learners, i.e., biased support vector machines.
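A rough way to see why combining models can lower variance is to compare the spread of one noisy prediction with the spread of an average of many. The simulation below is a simplified sketch: it assumes each model's error is independent, unit-variance Gaussian noise around a true value of 10, and `noisy_prediction` is a hypothetical stand-in for a trained model. Models trained on bootstrap samples of the same data are correlated, so the reduction seen in practice is smaller than here.

```python
import random
from statistics import mean, pvariance

rng = random.Random(1)  # fixed seed so the run is repeatable

def noisy_prediction():
    """Hypothetical model output: true value 10 plus unit-variance noise."""
    return 10.0 + rng.gauss(0.0, 1.0)

# Spread of a single model's prediction over many trials.
single = [noisy_prediction() for _ in range(2000)]

# Spread of the average of 25 such predictions over many trials.
ensemble = [mean(noisy_prediction() for _ in range(25)) for _ in range(2000)]

print(pvariance(single), pvariance(ensemble))
```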

The bagging technique is useful for both regression and statistical classification. Bagging and Boosting are ensemble methods focused on getting N learners from a single learner. Ensemble machine learning can be mainly categorized into bagging and boosting.
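The difference between the regression and classification cases lies mainly in how the learners' outputs are aggregated. A small sketch, with hypothetical helper names and made-up predictions: averaging is a common choice for regression, and majority voting for classification.

```python
from collections import Counter
from statistics import mean

def aggregate_regression(predictions):
    """Combine numeric predictions by averaging them."""
    return mean(predictions)

def aggregate_classification(predictions):
    """Combine class labels by majority vote."""
    return Counter(predictions).most_common(1)[0][0]

print(aggregate_regression([2.0, 3.0, 4.0]))               # 3.0
print(aggregate_classification(["spam", "ham", "spam"]))   # spam
```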

Floods, one of the most common natural hazards globally, are challenging to anticipate and estimate accurately.

Bagging is used to reduce variance. It is a type of ensemble technique in which a single training algorithm is used on different subsets of the training data, where the subsets are sampled with replacement.
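Sampling with replacement means the same training example can appear more than once in a subset while others are left out. A minimal sketch using only the standard library follows; the helper name `bootstrap_sample` and the toy data are assumptions made for illustration.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

rng = random.Random(0)  # fixed seed so the run is repeatable
training_data = list(range(10))
subsets = [bootstrap_sample(training_data, rng) for _ in range(3)]

for subset in subsets:
    # Each subset has the original size; items may repeat or be missing.
    print(sorted(subset))
```

Each subset would then be passed to the same training algorithm to produce one member of the ensemble.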

Machine learning is a subfield of artificial intelligence that lets models learn on their own from data without being explicitly programmed. The general principle of an ensemble method in machine learning is to combine the predictions of several models.
